3DJ

Overview

3DJ (working title) is a project I worked on for my electronic music independent study course (Music 590i) at Iowa State University. It uses the H3D API for haptic (touch) and stereo graphic rendering, Reaktor as the electronic synthesizer, and a Phantom Desktop with ReachIn display as the hardware.

3DJ uses Open Sound Control (OSC) to handle communication between my application, running under the H3D engine, and Reaktor in order to create music based on the current scene. It can be thought of as a virtual record player with the haptic device acting as the needle; only instead of playing pre-recorded music, it generates music based on several parameters of the object.

Project Goal

The goal of this project was to turn 3D graphical objects into real-time music using a haptic pen and an electronic synthesizer. The most important part was properly mapping the object properties to sound properties, more so than creating a complicated synthesizer design. For example, the speed of the object's rotation is mapped to the frequency of the modulator, the roundness of the object is mapped to the carrier's waveform, and so on.

Key Components

H3D

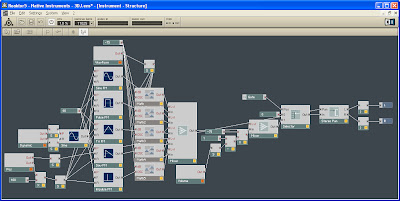

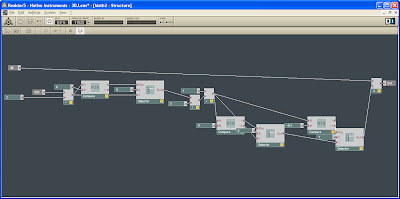

H3D is a scene graph API that allows you to define not only the graphical object but also its haptic properties. It uses X3D (an XML-based, open source scene graph language) to define the various objects in the scene. C++ is used as the back end to handle the actual rendering of objects (both the graphics and the haptics). Finally, H3D uses Python as its scripting language to handle tasks that don't require as much speed (sending messages to Reaktor, handling the dynamic transform, etc.).

The build of the H3D API I used for this project had previously been modified as part of my Haptic Surface Manipulation System (HASM). Those modifications, which I reused in 3DJ, include the ability to use non-visible textures, which I use to change the friction on the fly to encourage users to stick to the "grooves" of the object. I also looked into using another texture whose HSL (hue, saturation, and lightness) values would be piped into Reaktor to control additional sound properties, but I decided against this approach after several experiments with different instrument designs. Finally, I made several tweaks to the code for the 3DJ project in order to obtain slightly more information about the object for use in Python and to reduce the maximum amount of friction.

Reaktor

I chose Reaktor as the electronic synthesizer for this project because I had previous experience with it and because it provides a graphical interface that allows for quick and easy manipulation of the instrument design. However, Reaktor also has two major drawbacks with regard to OSC: it is terribly documented, and it does not fully implement the OSC protocol. Thus, the first few weeks of my time on the 3DJ project were spent learning OSC (which I had never used before) and researching how Reaktor uses OSC and what the limitations of its implementation are. For a brief write-up describing how to set up Reaktor to receive OSC messages from a custom-built program, please read this.

Instrument Design/Mappings

As stated, the goal of this project was to create fitting mappings between the graphical scene and the synthesizer design. The y-position of the haptic device relative to the top and bottom (north and south poles) of the object is mapped to the amplitude of the carrier oscillator, so that the highest amplitude is at the vertical center of the object. I had considered mapping height to pitch, as opposed to amplitude; however, I felt that amplitude fit the mapping better since the object is almost always tapered at the ends (implying less of the object) and fatter in the middle (implying more of the object), which I felt mapped logically to amplitude. The horizontal position of the pen is mapped to the stereo pan, so that the further the pen is from the center the further the sound is panned to the appropriate side.
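The two position mappings described above could be sketched as follows. This is an illustration rather than the project's code: the function names, the linear falloff toward the poles, and the [-1, 1] pan range are assumptions, since the text only states that amplitude peaks at the vertical center and that pan follows the horizontal distance from the center.

```python
def clamp(x, lo, hi):
    """Restrict x to the closed interval [lo, hi]."""
    return max(lo, min(hi, x))


def carrier_amplitude(pen_y, south_y, north_y):
    """Amplitude in [0, 1]: 1 at the vertical center, falling linearly to 0 at either pole."""
    center = (north_y + south_y) / 2.0
    half_height = (north_y - south_y) / 2.0
    distance = abs(pen_y - center) / half_height
    return 1.0 - clamp(distance, 0.0, 1.0)


def stereo_pan(pen_x, center_x, half_width):
    """Pan in [-1, 1]: -1 is hard left, 0 is center, +1 is hard right."""
    return clamp((pen_x - center_x) / half_width, -1.0, 1.0)
```

Clamping keeps both values in range even if the pen briefly leaves the object's bounding region.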

The properties of the dynamic transform, which is restricted to only rotate the object around the y-axis, are mapped to the pitch and amplitude of the modulator. The speed of rotation is mapped to the pitch of the modulator since speed and frequency are, at the base level, the same concept. The force being applied to the object and causing the transform is mapped to the amplitude because I felt force mapped well to the concept of intensity that amplitude represents. The pitch of the carrier oscillator is set to 60 (middle C) in order to make the manipulations by the modulator more obvious.
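As a rough sketch of the modulator mappings, the snippet below scales rotation speed to a pitch and applied force to an amplitude. It assumes that pitch values such as 60 are MIDI note numbers and uses the standard equal-temperament conversion (A4 = 440 Hz) to show the resulting frequency. The note range, the maximum speed, and the maximum force are hypothetical parameters, not values from the project.

```python
def midi_to_hz(note):
    """Convert a MIDI note number to a frequency in Hz (equal temperament, A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def modulator_pitch(angular_speed, max_speed, low_note=24.0, high_note=96.0):
    """Map rotation speed (either direction) linearly onto a pitch range."""
    fraction = min(abs(angular_speed) / max_speed, 1.0)
    return low_note + fraction * (high_note - low_note)


def modulator_amplitude(force, max_force):
    """Map the force driving the rotation onto an amplitude in [0, 1]."""
    return max(0.0, min(force / max_force, 1.0))
```

Under these assumptions a carrier pitch of 60 corresponds to roughly 261.6 Hz, and an object spinning at half of `max_speed` would put the modulator at pitch 60 as well.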

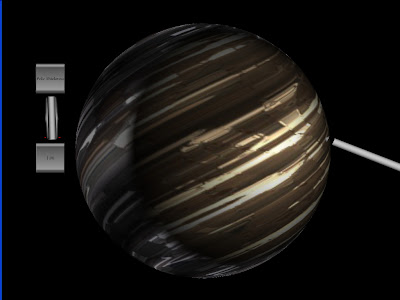

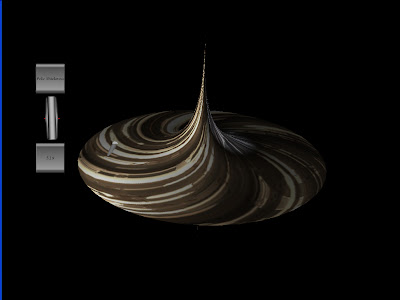

The thickness of the north-south poles (the n value of the superquadric formula) is mapped to the choice of waveform (sine, pulse, triangle, sawtooth, or impulse). The n value determines how much of each waveform is heard by setting its level in the mixer module. The sine wave is audible from 0.5 to 3, as this is when the object is roundest. The pulse wave is mapped from 2 to 5, as this is when the object begins to form a bit of an edge but is still a fairly simple shape. From 4 to 7 the triangle wave takes over, as this is when the object tends to end in a point and be more spindle-like. The sawtooth is mapped from 6 to 9, as this is when the object begins to have a straight vertical protrusion, which is similar to the sudden peak of the sawtooth wave. Finally, an n value from 8 to 10 is mapped to the impulse wave, as this is where the object is completely flat with just a straight pole along the y-axis, which mimics the shape of the impulse wave. All of these mappings are handled by the math modules.
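In 3DJ these levels are computed inside Reaktor, but the logic can be sketched in Python for clarity. The ranges below are the ones listed above. The linear crossfade inside each overlapping region is an assumption, since the exact fade shape is not specified, and the names `RANGES` and `mixer_levels` are hypothetical.

```python
# (waveform, first n value, last n value), in order of increasing n
RANGES = [
    ("sine", 0.5, 3.0),
    ("pulse", 2.0, 5.0),
    ("triangle", 4.0, 7.0),
    ("sawtooth", 6.0, 9.0),
    ("impulse", 8.0, 10.0),
]


def mixer_levels(n):
    """Return a mixer level in [0, 1] for each waveform at a given n value."""
    levels = {}
    for i, (name, start, end) in enumerate(RANGES):
        if n < start or n > end:
            levels[name] = 0.0
            continue
        level = 1.0
        # Fade in while the previous waveform is still audible.
        if i > 0 and n < RANGES[i - 1][2]:
            prev_end = RANGES[i - 1][2]
            level = min(level, (n - start) / (prev_end - start))
        # Fade out once the next waveform has started.
        if i < len(RANGES) - 1 and n > RANGES[i + 1][1]:
            next_start = RANGES[i + 1][1]
            level = min(level, (end - n) / (end - next_start))
        levels[name] = level
    return levels
```

With this scheme, `mixer_levels(2.5)` gives the sine and pulse waves a level of 0.5 each, so neighboring waveforms blend smoothly as the object's shape changes instead of switching abruptly.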

The force being applied to the haptic device (either through friction or just from force pushing back when the user is touching the object) is mapped to the final volume level. This is done because, once again, force seems to be a logical mapping to volume/amplitude. The gate is set by whether or not the object is being touched since I did not want the object producing sounds while not being touched.

I had also considered mapping the pen orientation/angle to an envelope or another sound property; however, after some experimentation the mapping seemed neither obvious nor practical, so I decided to cut it from the instrument. There were a few instrument designs I played with, but ultimately this setup proved to make the most sense given the object's properties.

Hardware

Aside from the computer used to run 3DJ, I used a Phantom Desktop both as the input device and to handle the haptic force rendering. In addition, I used a ReachIn display to provide a more natural way of using the Phantom to interact with the 3D objects.

Future Work

In the future, the work done with 3DJ will be applied to the HASM project in order to provide real-time sound interpretation of the geographic data. Currently HASM relies on MIDI for audio feedback, which offers far fewer ways of providing that feedback to users than an electronic synthesizer driven over the OSC protocol.